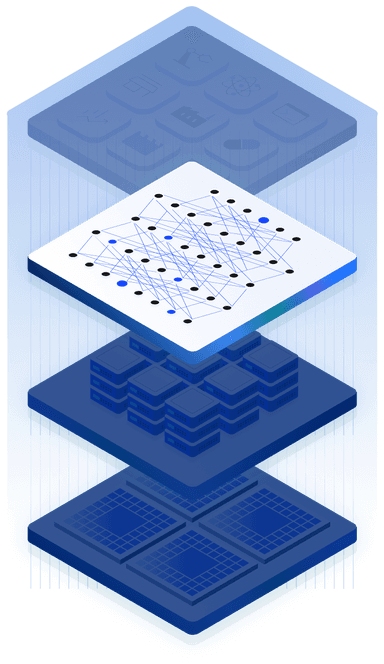

AI—and the generative foundation models such as large language models (LLMs) that power it—are becoming indispensable in industry and daily life. Yet most are developed outside Japan and are not optimized for Japanese language, culture, or business practices.

Preferred Networks (PFN) developed PLaMo™ (pronounced "plah-mo"), a Japan-made large language model built entirely from scratch and trained on data rich in Japanese knowledge. PLaMo™ delivers advanced Japanese understanding and generation capabilities and is available via a cloud API, Amazon Bedrock Marketplace, and on-premises deployments. PFN is also developing specialized models for fields such as finance, healthcare, and translation.

PLaMo™ (pronounced "plah-mo") is a Japan-made large language model (LLM) developed fully from scratch by PFN. Trained on a high-quality training dataset built by PFN and rich in Japanese text, PLaMo™ achieves world-class performance in Japanese language understanding and generation.

PLaMo Prime is the flagship model, combining world-class Japanese-language performance with a compact model size. It is available through the cloud-based PLaMo API (paid service) and the assistant chat service PLaMo Chat (currently offering free trial access during the launch campaign). PLaMo Prime is also offered via Amazon Bedrock Marketplace and for on-premises environments. It comes built in by default on third-party platforms such as miibo (a Japanese AI building platform), Tachyon Generative AI (a corporate AI service), and QommonsAI (used by over 150 local governments in Japan).

PFN has also developed a small language model (SLM) based on the outcomes of PLaMo Prime. Despite its compact size, it delivers Japanese-language performance comparable to that of large language models (LLMs). It can run without cloud connectivity on edge devices such as automobiles, robots, manufacturing equipment, and PCs, as well as in on-premises environments.

PLaMo Translate is a large language model (LLM) developed fully from scratch by PFN and specialized for translating text to and from Japanese. It was trained on a unique dataset with a high proportion of both Japanese and English text. As a result, it achieves higher quality and accuracy in Japanese-English translation than foreign models. PLaMo Translate has a compact size and a highly efficient computational design, which makes it suitable for on-premises or local deployment. A browser extension is also available for convenient use.

PLaMo-fin-prime is a finance-specialized version of PLaMo™. It was fine-tuned with Japanese financial knowledge and further aligned through additional training to better reflect user intent. The model is designed to be practical for financial tasks that require domain-specific expertise. PFN offers a customization service that builds a dedicated AI model on top of PLaMo-fin-prime for each financial institution. These customized models can be deployed in secure on-premises environments that meet financial institutions' security requirements. They can also be further trained on each institution's proprietary data, product information, workflows, and internal knowledge. This approach lets institutions use confidential data safely, make specialized operations more efficient, and improve customer service quality.

PFN has released several PLaMo models developed through the GENIAC project as open models. It has also released other LLMs fine-tuned on additional data as open models.

GENIAC (Generative AI Accelerator Challenge) is a project led by Japan’s Ministry of Economy, Trade and Industry (METI) and the New Energy and Industrial Technology Development Organization (NEDO) to strengthen Japan’s capabilities in developing foundation models for generative AI.