
PFN Releases PLaMo-13B Open-Source Large Language Model in Japanese and English for Research and Commercial Use

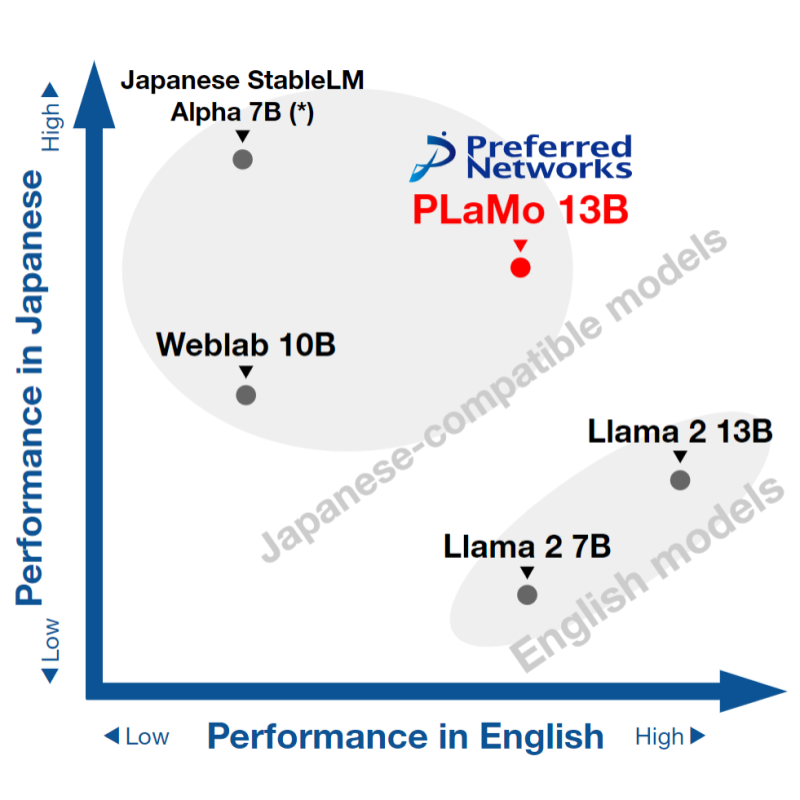

New 13 billion-parameter pre-trained model delivers one of the world’s highest performance levels for Japanese and English combined

2023.09.28

TOKYO – September 28, 2023 – Preferred Networks, Inc. (PFN) today released PLaMo™-13B (Preferred Language Model), a 13 billion-parameter pre-trained large language model (LLM), at https://huggingface.co/pfnet/plamo-13b under an open source software license that permits both research and commercial use. Among currently available pre-trained models of similar parameter size, PLaMo-13B achieved one of the world’s highest combined Japanese and English scores on the widely used lm-evaluation-harness benchmark.

Performance comparison in Japanese and English

Each plot shows the average deviation of benchmark scores in both languages.

*For a fair comparison, PFN has re-evaluated the model and adopted a higher score than the one published by the developer.

Foundation models, a new category of artificial intelligence (AI) systems that includes large language models and diffusion models, have recently been adopted in a range of domains and delivered remarkable results. Anticipating that foundation models will boost innovation across industries, PFN has been studying them closely and began building LLMs in spring 2023.

PLaMo-13B

To develop PLaMo-13B, PFN collected and processed open data that is publicly available for training AI models, building a 1.4 trillion-token dataset in Japanese and English. PFN trained the model for nearly one month on 480 NVIDIA A100 GPUs provided by Japan’s National Institute of Advanced Industrial Science and Technology through its AI Bridging Cloud Infrastructure (ABCI). By enlarging the English and Japanese training dataset, PFN kept the model compact at 13 billion parameters, so PLaMo-13B users can run the LLM on a single standard GPU server while still benefiting from one of the world’s highest performance levels for Japanese and English combined.
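To put the figures above in perspective, the short sketch below turns them into rough estimates. It is a back-of-the-envelope illustration, not PFN's own accounting, and it rests on three labeled assumptions: weights stored in 16-bit precision (2 bytes per parameter), the common "about 6 × parameters × tokens" approximation for dense transformer training compute, and "nearly one month" rounded to 30 days.

```python
"""Back-of-the-envelope estimates for PLaMo-13B (illustrative assumptions only)."""

PARAMS = 13e9        # 13 billion parameters, as stated in the release
TOKENS = 1.4e12      # 1.4 trillion training tokens, as stated in the release
NUM_GPUS = 480       # NVIDIA A100 GPUs on ABCI, as stated in the release
TRAINING_DAYS = 30   # assumption: "nearly one month" rounded to 30 days


def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; 2 bytes/param assumes 16-bit storage.

    Activations and the KV cache needed at inference time come on top of this.
    """
    return params * bytes_per_param / 1e9


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the common ~6 * N * D rule of thumb."""
    return 6 * params * tokens


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours if every GPU runs for the whole period."""
    return num_gpus * days * 24


if __name__ == "__main__":
    print(f"16-bit weights: ~{weight_memory_gb(PARAMS):.0f} GB")
    print(f"Training compute: ~{training_flops(PARAMS, TOKENS):.2e} FLOPs")
    print(f"GPU-hours: ~{gpu_hours(NUM_GPUS, TRAINING_DAYS):,.0f}")
```

Under these assumptions the weights take roughly 26 GB, which fits within the memory of a single 80 GB A100 and is consistent with running the model on one standard GPU server. Training works out to on the order of 1e23 FLOPs and about 345,600 GPU-hours.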

PFN has released the code and model parameters required for inference with PLaMo-13B under the Apache License v2.0, which permits free research and commercial use, to help many users conduct research and tests with an LLM that achieves high performance in Japanese and English combined.

New subsidiary for multi-modal foundation model

PFN plans to establish a new subsidiary, Preferred Elements, Inc. (PFE), through a company split on November 1, 2023, to accelerate the development of a high-performance multi-modal foundation model that can process text, images, audio, video, spatial information, genomic data, sensor values and various other forms of data. PFN’s Chief Executive Researcher Daisuke Okanohara will also serve as the president of PFE.

PFE will develop a multi-modal foundation model that is far larger and more capable than PFN’s new LLM, and aims to launch it commercially in 2024 while applying it to products and services provided by PFN group companies and their partners. PFE will build on the practical experience and knowledge of LLM development that PFN has accumulated, including the collection and processing of pre-training data and the pre-training of LLMs on large-scale computing infrastructure.

Going forward, PFE will work with the government, educational institutions and private companies to collect and develop pre-training data while addressing known LLM issues such as hallucination, bias and misuse, so that its foundation model can empower industry and society as safe and reliable infrastructure.

PFN at CEATEC Japan 2023

PFN will set up a booth at CEATEC Japan 2023, to be held from Tuesday, October 17, to Friday, October 20, 2023, at Makuhari Messe, Chiba, Japan, where the company will demonstrate how it plans to apply LLMs, including PLaMo-13B, to 3D model generation, materials simulation, robot control and more.

At the PFN booth (A121, Advanced Technology Area, Hall 8), PFN members will demonstrate how PFN products will work with LLMs in the future and give on-stage presentations on the technological advances the company envisions. PFN will also exhibit an actual server equipped with the MN-Core™ AI processor, as well as MN-Core 2, the latest processor in the series.